
Pervasive Rush To Take On The Challenge of Scalable Data Integration

As a member of the Boulder BI Brain Trust (BBBT), I sat in on a session given last week by Mike Hoskins, Chief Technology Officer (CTO) and Executive Vice President of Pervasive Software. The session started by covering Pervasive's financial performance, $47.2 million in revenue for fiscal 2010 and 38 consecutive quarters of profitability, before getting into the technology itself. Headquartered in Austin, Pervasive offers its PSQL embedded database, a data and application exchange (Pervasive Business Xchange), and its Pervasive Data Integrator and Pervasive Data Quality products, which can connect to a wide range of data sources using the Pervasive Universal Connect suite of connectors. It also offers a number of data solutions. Pervasive has had success in embedding its technology in ISV offerings and in SaaS solutions on the Cloud. However, what caught my eye in a very good session was its new scalable data integration engine, DataRush.

I have had concerns for some time about how data integration tools are going to step up to the challenge of big data. We are already in an era where hundreds of terabytes, and even petabytes, are a reality in data warehouses. The volume of data needed for web analytics is also massive, let alone the tsunami of sensor data coming over the horizon (if that data ever makes it into a data warehouse, we really are going to redefine "large"). There is no doubt that both data volumes and the number of data sources that businesses need to integrate will keep growing. We all talk about how important data warehouse appliances, columnar compression and scalable MPP databases are for handling large data volumes. But what about data integration? There is not much point in being able to manage big data in databases if we can’t get the data in there in the first place. So data integration vendors have to step up to this challenge. Of course, several already have. Many products on the market have offered pipeline parallelism during ETL processing for years. We have also seen many vendors switch to an ELT model for better performance, so that they can exploit parallel SQL in the target DBMS. That, of course, makes the data integration engine dependent on the parallel DBMS. This is fine as long as the DBMS workload manager can fence off and manage the data integration workload separately from query processing workloads. But is that it? Modern hardware consists of multi-processor, multi-core systems. Can a data integration engine not exploit this itself, without relying on a DBMS? Is there any way to get more bang for your buck on this kind of hardware?
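For readers less familiar with pipeline parallelism, here is a minimal Python sketch of the general idea (it is not taken from any Pervasive product): each ETL stage runs in its own thread and bounded queues connect the stages, so while one stage transforms record n, the previous stage can already be extracting record n+1. The `run_pipeline` function, the end-of-stream sentinel and the sample records are illustrative assumptions. In CPython the threads mainly help by overlapping I/O-bound stages; CPU-bound stages would need separate processes to use more than one core.

```python
import queue
import threading
from typing import Any, Callable, Iterable, List

# Hypothetical end-of-stream marker passed from stage to stage.
_END = object()


def run_pipeline(source: Iterable[Any], stages: List[Callable[[Any], Any]]) -> List[Any]:
    """Run each stage in its own thread, connected by bounded FIFO queues.

    Sketch only: there is no error handling, so a stage that raises
    would leave the downstream stages waiting forever.
    """
    # queues[i] feeds stages[i]; the last queue feeds the load step.
    queues = [queue.Queue(maxsize=100) for _ in range(len(stages) + 1)]

    def extract() -> None:
        for item in source:
            queues[0].put(item)  # blocks if the next stage falls behind
        queues[0].put(_END)

    def stage_worker(fn: Callable[[Any], Any], inq: queue.Queue, outq: queue.Queue) -> None:
        while True:
            item = inq.get()
            if item is _END:
                outq.put(_END)  # propagate end-of-stream downstream
                return
            outq.put(fn(item))

    threads = [threading.Thread(target=extract)]
    for i, fn in enumerate(stages):
        threads.append(threading.Thread(target=stage_worker, args=(fn, queues[i], queues[i + 1])))
    for t in threads:
        t.start()

    # "Load" step: drain the final queue in the calling thread.
    loaded: List[Any] = []
    while True:
        item = queues[-1].get()
        if item is _END:
            break
        loaded.append(item)
    for t in threads:
        t.join()
    return loaded


rows = [" alice ,42", "BOB, 17 ", "carol,99"]
result = run_pipeline(
    rows,
    [
        lambda r: [field.strip() for field in r.split(",")],  # parse fields
        lambda f: (f[0].title(), int(f[1])),                   # cleanse and type-convert
    ],
)
print(result)  # [('Alice', 42), ('Bob', 17), ('Carol', 99)]
```

Because each stage has exactly one thread and the queues are FIFO, record order is preserved end to end; the bounded queues also stop a fast extract stage from flooding memory when a later stage is slow.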

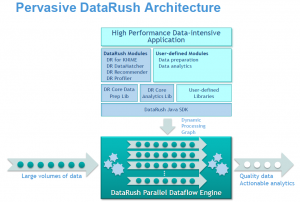

Step in Pervasive DataRush. This new engine from Pervasive is designed from the ground up as an MPP data integration engine that can exploit every core on a multi-core, multi-processor server. This means that you might potentially avoid the need to go to clustered hardware because you can scale up before you need to scale out. The DataRush architecture is shown below
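The DataRush internals are not covered here, so what follows is only a generic Python sketch of partition (data) parallelism, the kind of scale-up approach described above: split the rows into one partition per core, transform each partition independently, and merge the results in order. All function names (`partition`, `partitioned_transform`, `standardise_country`) are hypothetical and are not DataRush APIs. The executor is passed in as a parameter: because of CPython's global interpreter lock, a CPU-bound transform would need a `concurrent.futures.ProcessPoolExecutor` (with module-level, picklable functions) to actually use several cores, while the example uses a `ThreadPoolExecutor` to keep it simple to run.

```python
import os
from concurrent.futures import Executor, ThreadPoolExecutor
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def partition(rows: Sequence[T], n: int) -> List[Sequence[T]]:
    """Split rows into at most n contiguous partitions of roughly equal size."""
    size = max(1, -(-len(rows) // n))  # ceiling division, at least 1
    return [rows[i:i + size] for i in range(0, len(rows), size)]


def _transform_partition(fn: Callable[[T], R], part: Sequence[T]) -> List[R]:
    # Module-level so it could also be pickled by a ProcessPoolExecutor.
    return [fn(row) for row in part]


def partitioned_transform(
    rows: Sequence[T],
    fn: Callable[[T], R],
    executor: Executor,
    n_partitions: int = os.cpu_count() or 1,
) -> List[R]:
    """Apply fn to every row, one partition per worker, and merge the results."""
    parts = partition(rows, n_partitions)
    # Executor.map yields results in submission order, so input order is preserved.
    per_partition = executor.map(_transform_partition, [fn] * len(parts), parts)
    return [row for part in per_partition for row in part]


def standardise_country(code: str) -> str:
    # Hypothetical cleansing rule: trim whitespace and upper-case a country code.
    return code.strip().upper()


with ThreadPoolExecutor(max_workers=4) as pool:
    codes = partitioned_transform([" gb", "it ", "se", "us ", " fr"], standardise_country, pool, 4)
print(codes)  # ['GB', 'IT', 'SE', 'US', 'FR']
```

Partitioning works well for row-by-row transforms like cleansing; operations such as aggregation or de-duplication would need rows with the same key routed to the same partition, which this simple contiguous split does not attempt.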

What is interesting about DataRush is not just that it exploits multiple cores, but that it also has an analytics library and other plug-in modules. The one that caught my eye was the DataRush Recommender module. It strikes me that this engine (which can also be extended to support user-defined libraries) can not only integrate data in parallel but also analyse that data using analytical (data mining) models at the same time. Couple that with the DataRush Recommender module and we are bordering on complex event processing (CEP). It seems we just need a rules engine in there and all of a sudden we are into massively parallel CEP. Given that data integration is already rules driven, it certainly looks to me as though this product could go well beyond just doing integration in parallel, important as that is.

Pervasive has also made its products available as a PaaS offering on the Amazon EC2 Cloud as well as on-premise, which means they can integrate and clean data from inside and outside the enterprise. Given that you can also embed the technology, it is certainly worth a look. I plan to cover it in more detail in my upcoming Enterprise Data Governance and Master Data Management class running in London on September 22-24.
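To make the "integrate, analyse, then apply a rule" idea concrete, here is a minimal single-pass Python sketch. The record fields, the hand-set linear score standing in for a data mining model, and the fixed threshold standing in for a rules engine are all hypothetical; this is not the DataRush Recommender or any DataRush API, just an illustration of why combining the three steps in one engine starts to look like CEP.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator

# Hypothetical stand-ins: a hand-set linear score in place of a trained
# data mining model, and a fixed threshold in place of a rules engine.
AMOUNT_WEIGHT = 0.01
FAILED_LOGIN_WEIGHT = 0.5
ALERT_THRESHOLD = 2.0


@dataclass
class Record:
    customer_id: str
    amount: float
    failed_logins: int


@dataclass
class Event:
    customer_id: str
    score: float


def cleanse(raw: Dict[str, str]) -> Record:
    """Integration step: trim and type-convert raw fields; blanks become 0."""
    return Record(
        customer_id=raw["customer_id"].strip(),
        amount=float(raw.get("amount") or 0),
        failed_logins=int(raw.get("failed_logins") or 0),
    )


def score(rec: Record) -> float:
    """Analytics step: apply the (hypothetical) model to a clean record."""
    return AMOUNT_WEIGHT * rec.amount + FAILED_LOGIN_WEIGHT * rec.failed_logins


def detect(stream: Iterable[Dict[str, str]]) -> Iterator[Event]:
    """Cleanse, score and apply the rule to each record in a single pass."""
    for raw in stream:
        rec = cleanse(raw)
        s = score(rec)
        if s > ALERT_THRESHOLD:  # rule step: emit an event only above threshold
            yield Event(rec.customer_id, s)


stream = [
    {"customer_id": " c1 ", "amount": "50", "failed_logins": "0"},
    {"customer_id": "c2", "amount": "120", "failed_logins": "3"},
    {"customer_id": "c3", "amount": "", "failed_logins": "5"},
]
events = list(detect(stream))
print([e.customer_id for e in events])  # ['c2', 'c3']
```

Since `detect` is a generator, events are emitted as records flow through rather than after a batch completes; in a parallel engine the same per-record logic could run inside each partition.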
